Horn, H., Horn, W., Paul, L., Uhlmann, D., & Röske, I. (2006). Drei Jahrzehnte kontinuierliche Untersuchungen an der Talsperre Saidenbach: Fakten, Zusammenhänge, Trends. Abschlussbericht zum Projekt "Langzeitstabilität der biologischen Struktur von Talsperren-Ökosystemen" der Arbeitsgruppe "Limnologie von Talsperren" der Sächsischen Akademie der Wissenschaften zu Leipzig (pp. 1–178). Verlag Dr. Uwe Miersch, Ossling.

Keeling, C. D., Piper, S. C., Bacastow, R. B., Wahlen, M., Whorf, T. P., Heimann, M., & Meijer, H. A. (2001). Exchanges of atmospheric CO2 and 13CO2 with the terrestrial biosphere and oceans from 1978 to 2000. I. Global aspects (p. 88). Scripps Institution of Oceanography, San Diego.

Kleiber, C., & Zeileis, A. (2008). Applied econometrics with R. Springer.

Kronenberg, F., R. (2021). Das regionale Klimainformationssystem ReKIS – eine gemeinsame Plattform für Sachsen, Sachsen-Anhalt und Thüringen. https://rekis.hydro.tu-dresden.de/

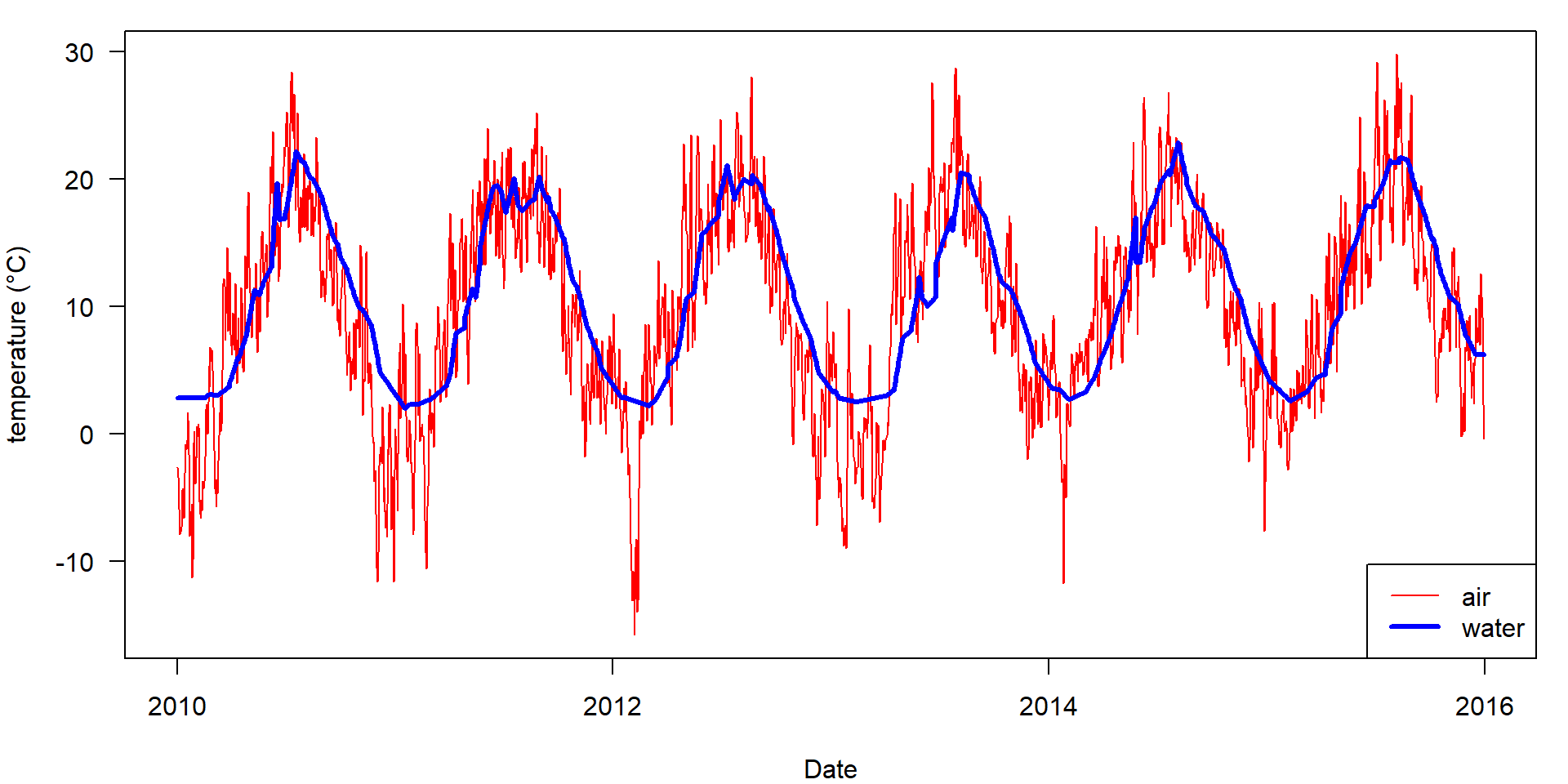

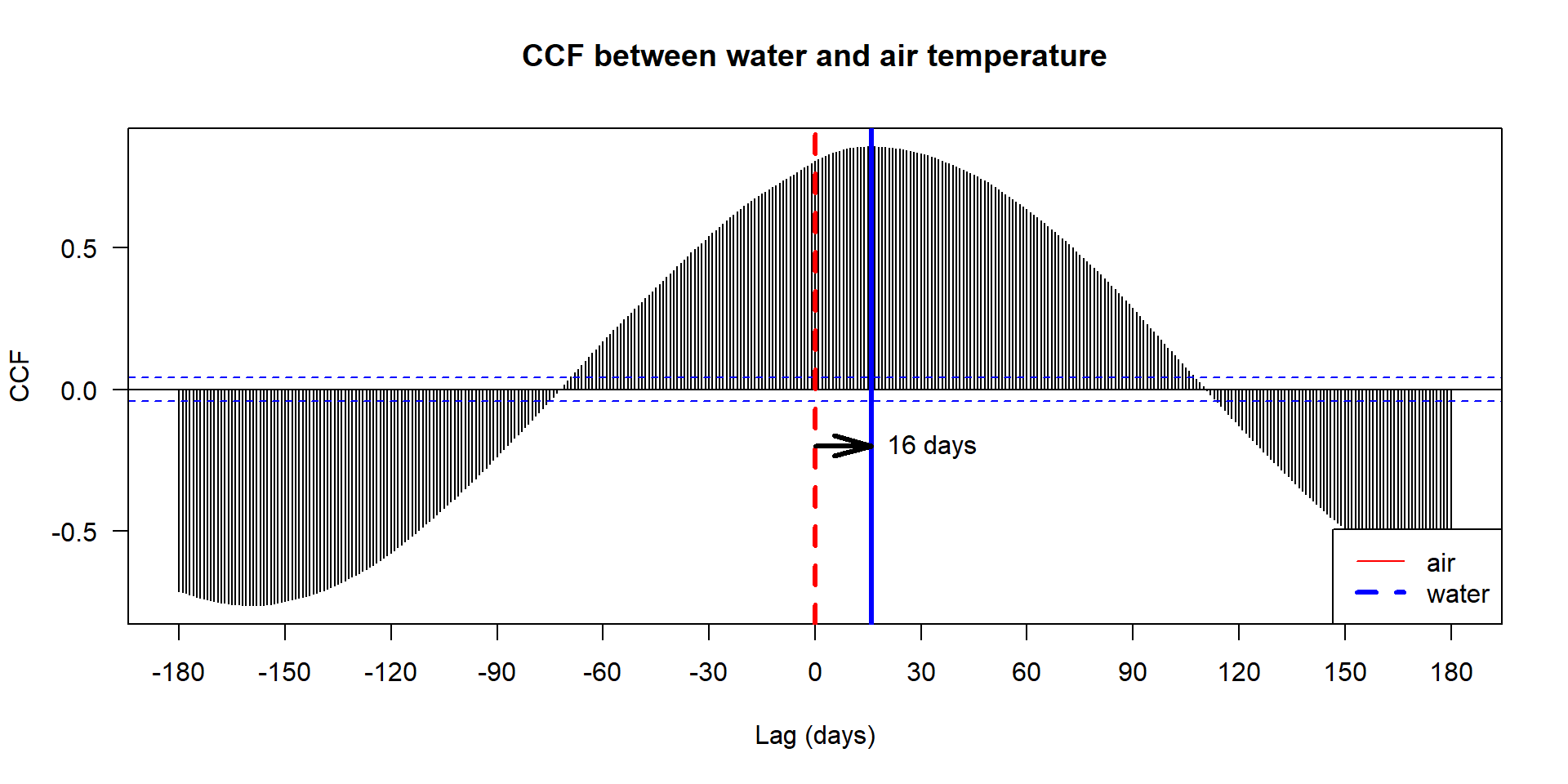

Paul, L., Horn, H., & Horn, W. (2020). Saidenbach reservoir in situ and Saidenbach reservoir inlets. Data packages 20, 23 and 177 from the IGB freshwater research and environmental database (FRED). Available according to the ODB CC-BY license. https://fred.igb-berlin.de

Shumway, R. H., & Stoffer, D. S. (2000). Time series analysis and its applications (3rd ed.). Springer.

Shumway, R. H., & Stoffer, D. S. (2019). Time series: A data analysis approach using R. CRC Press.

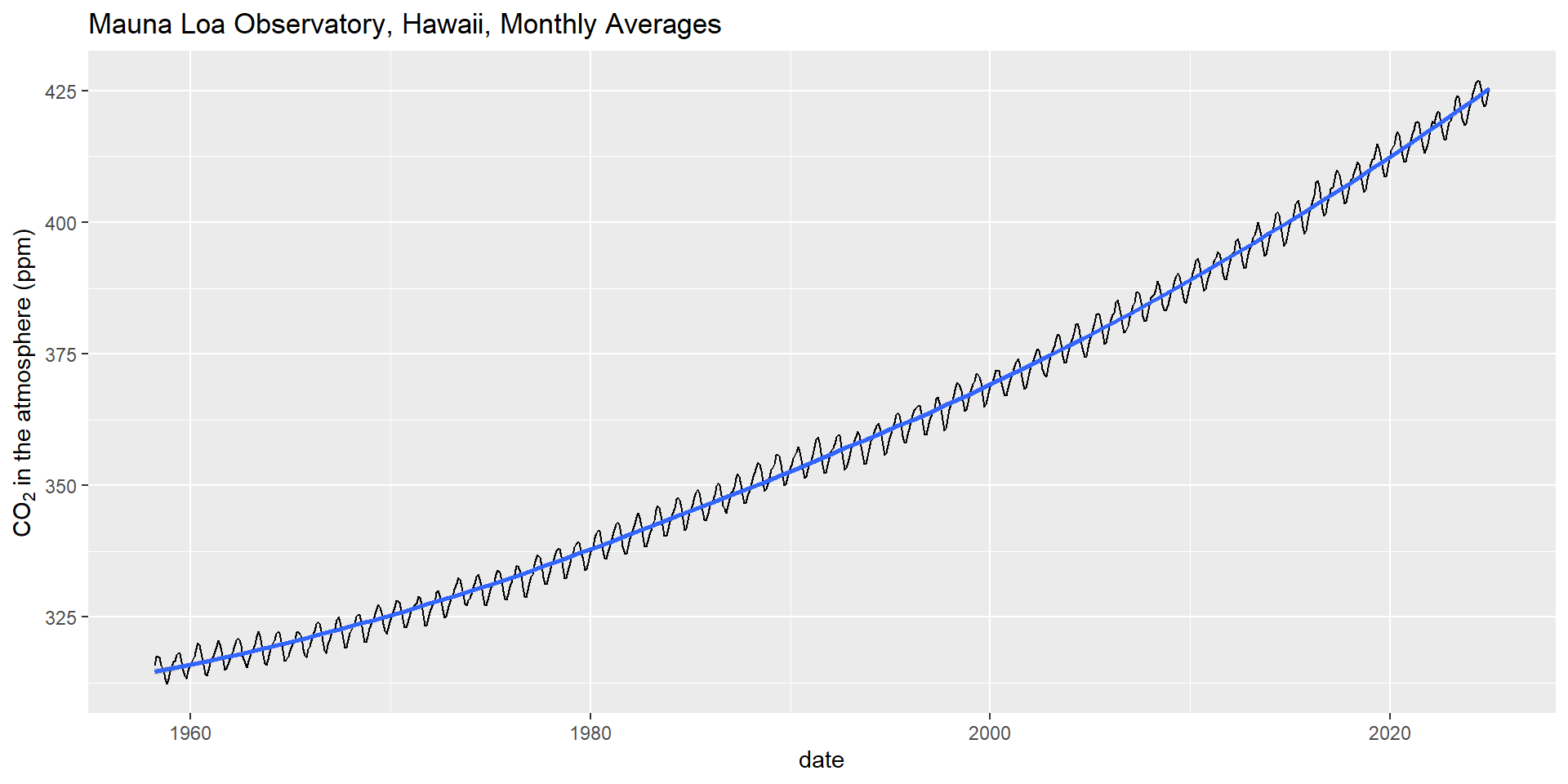

Tans, P., & Keeling, C. D. (2023). Trends in atmospheric carbon dioxide, Mauna Loa CO2 monthly mean data. NOAA Earth System Research Laboratories, Global Monitoring Laboratory. https://gml.noaa.gov/ccgg/trends/data.html